Robots Will Never Replace Humans

by Sylvia Engdahl

Robots may become more intelligent than humans, but intelligence is not the only aspect of the human mind.

The development of artificial intelligence (AI) is proceeding rapidly. We will soon have all the devices discussed in my essay "The Future of Being Human" (in my book by that title) and far more; the assistance of AI will bring about profound changes in industry, medicine, and government as well as in the convenience of everyday life. Much will become possible that is beyond our present ability to accomplish. Eventually we will have self-replicating robots to mine extraterrestrial resources, build settlements on other worlds, and explore distant stars, work that would be difficult if not impossible without them.

AI will be able to do many things better than people can, including some that demand capabilities once thought to be exclusively human. But in my opinion it's not going to act by volition, and it's not going to make autonomous decisions about tasks not given to it, no matter how intelligent it is. Intelligence in itself does not imply free will. Some philosophers don't believe even humans have free will, but assuming that we do, it is a major distinction between the human mind and AI.

Furthermore, AI will rarely take the form of artificial beings, despite their popularity in science fiction. I don't think humanoid robots or androids will be produced except on a small scale for research or entertainment purposes. What would be the advantage? Robots in sci-fi films are cute, and robocops provide spectacularly violent screen action, but there is no good reason to take them any more literally than figures like Batman and Superman. The functions robots serve can better be done by specialized ones that aren't shaped like people. The idea of humanoid robot servants and/or workers is comparable to the ancient idea that men will someday fly with artificial wings--both are based on obsolete concepts. We don't need pseudo-people to do menial jobs when we have automated equipment, any more than planes need to look like birds.

Nor do we need robot police or soldiers. Crime in the future will be controlled not by SWAT teams but by sophisticated electronic surveillance and perhaps by remote immobilization of suspects for automated pickup. Wars will not be fought by ground combat, and as far as air combat is concerned, we already have drones. At present ground-based pilots control the drones; mightn't we replace them with robot pilots? Of course not--by the time we can produce a robot that can pilot a distant plane remotely, it will be possible for generals to command all the drones, plus various other devices not yet invented, from one AI-equipped room at headquarters. No artificial beings will be required.

Isaac Asimov's robot stories, which attributed emotion to robots, were influential in forming public feeling about them.

Nevertheless, there is a long tradition of personifying AI that influences current thought about it. Not only are fictional robots given names and genders, but they generally use the first person when speaking, whether human-shaped or not; even software such as Cortana refers to itself as "I." The character Data in Star Trek is shown to have emotions even though he claims to lack them, and since he's portrayed by a human actor, audiences are convinced that he's no mere machine. Children's stories, too, give robots feelings, a practice I've always considered emotionally misleading except where the fantastic intent is as unmistakable as it is with R2-D2. The proper designation for such fantasy is "pathetic fallacy," a literary term for the attribution of human emotion and conduct to things that are not human. But people have been conditioned to believe that conscious robots may become more than fantasy, and scientists--who themselves have grown up under this influence--are prone to agree.

Personally, I see no reason why AI ought to be conscious, let alone have emotions. The usual explanation given for the attempt to emulate the human mind is that ordinary robots, humanoid or not, are specialized and can perform only functions for which they have been specifically programmed, whereas we need generalized AI, now usually referred to as AGI, that is more versatile. We want robots with the ability to handle new situations that haven't been planned. Yes, but does this require consciousness, self-awareness? On one hand, some scientists say it does; on the other hand they claim that given access to enough data, such as the entire content of the Internet, a superintelligent machine could correlate more of it than any human could and take whatever action was necessary to achieve its goals. But this is true simply because manipulation of data is something for which a computer is better suited than a human. People have an advantage over superintelligent computers in handling the unexpected because we have experience--accumulated data--that computers presently lack, not because we are conscious. A conscious person without any applicable experience, such as a young child, would have no advantage, at least not in a practical sense.

The value of consciousness lies in something science cannot define. The idea that it could exist in artificial beings or networks arises from the assumption that human beings are no more than biological machines. Because we don't understand what else they are, scientists insist that the physical brain is the sole source of it, despite all the evidence of human experience to the contrary. Some believe that consciousness will somehow emerge if an artificial brain is made complex enough, which is just another way of saying the brain is all that matters. It is on a par with the notion that human minds could be uploaded to computers and thus attain immortality, which some people now believe to the extent of having their brains cryonically preserved after their deaths--a sad example of how deeply the philosophy of materialism now permeates our culture.

I maintain that the underlying reason for the effort to produce conscious robots is that AGI designers feel a need to reassure themselves about the adequacy of their materialistic premises (though they may not be aware of this motive). There are surely enough hints of alternatives in today's society to make diehard materialists uneasy. Emotionally, doubt is more upsetting to them than the prospect of the Singularity, a hypothetical point at which artificial intelligence will become more intelligent than humans and advance exponentially beyond our ability to foresee or control. Many futurists even welcome that prospect.

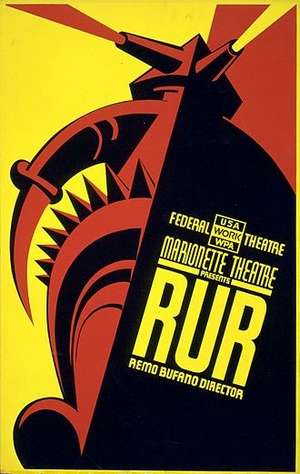

Poster for a 1939 New York production of the play R.U.R.

Unlikely as it is that the Singularity will ever happen, it has become fashionable to think that AGI may eventually supplant human beings. The idea that robots might someday take over the world and kill off humans has been around since Czech author Karel Capek introduced the word "robot" (derived from a Czech word meaning compulsory labor) in his 1920 play R.U.R. The robot takeover in the play was meant as an allegory about the exploitation of human workers, but since then many people, including some AGI researchers, have taken the idea seriously. In fact, scientific celebrities such as Stephen Hawking, Elon Musk, and Bill Gates actually believe that advances in AGI will result in self-aware robots that may seek to destroy us. Dr. Hawking told the BBC, "The development of full artificial intelligence could spell the end of the human race."

I don't think we need to worry about this, as I feel sure that conscious AGI is inherently impossible. What dismays me is that scientists who believe it could happen are working to produce such robots anyway. I hope their acknowledged motive is simply that if they don't do it someone else will, which unfortunately is true. It would be disastrous if only nations bent on conquest had superintelligent AGI, and it wouldn't need to be conscious to do a lot of harm.

The scenario of robot takeover depends on the assumption that AGI would act autonomously either with the desire to rule, or upon deciding that humans were "useless." Useless for what? AGI will have no criteria for usefulness except in regard to the goals it is designed to achieve. To be sure, if intended as a weapon it could be tasked with killing humans, but the danger of its killing everyone comes in the same class as the danger of annihilation by some other weapon of mass destruction--the human race will not be fully safe until we have established offworld colonies. But there is no reason to fear that superintelligent AGI would in itself be malevolent.

"Some people assume that being intelligent is basically the same as having human mentality," says computer expert Jeff Hawkins in his book On Intelligence. "They fear that intelligent machines will resent being 'enslaved' because humans hate being enslaved. They fear that intelligent machines will try to take over the world because intelligent people throughout history have tried to take over the world. But these fears rest on a false analogy. . . . Intelligent machines will not have anything resembling human emotion unless we painstakingly design them to." And emotion produced by design would be no more than a simulation. Therein lies the fallacy of the doomsayers' premise. The underlying reason materialists think intelligent AGI would "take over" is that they do assume intelligence implies human mentality--they have no choice but to assume it, since they believe that human mentality is nothing more than intelligence.

Of greater import than hypothetical danger from self-aware AGI is the mere fact that eminent experts such as Hawking, Musk, and Gates believe that it will exist. Their confidence in the inevitability of its creation goes to show that if and when efforts to produce conscious robots fail, it won't be because of inadequate technology. And therefore it will be strong, perhaps conclusive, evidence that there's indeed some aspect of human minds that materialism can't explain.

Copyright 2020 by Sylvia Engdahl

All rights reserved.

This essay is included in my ebook The Future of Being Human and Other Essays